Socrates famously disturbed the Athenian order by engaging in an open, exploratory dialogue with fellow Athenians interested in serious issues concerning society, virtue and what he called the “good life.” He was ultimately put to death for raising too many uncomfortable questions. Even during his execution, he demonstrated the value of dialogue as the basis of what we might be tempted to call the “democracy of the mind,” an idea that contrasts in interesting ways with the notion of political democracy that Athens in his day and most nations in ours have adopted.

Most people today think of Socrates’ death sentence as an abuse of democracy. After all, he was condemned not for subversive acts but for his stated beliefs. Athenian democracy clearly had a problem with free speech. To some extent, our modern democracies have been tending in the same direction, with their increasing readiness to label as “disinformation” any political position, philosophy or conviction that deviates from what they promote as the acceptable norm. Perhaps the one proof of democracy’s progress over the last two and a half millennia is that the usual punishment is deplatforming from Twitter or Facebook rather than the forced ingestion of hemlock.

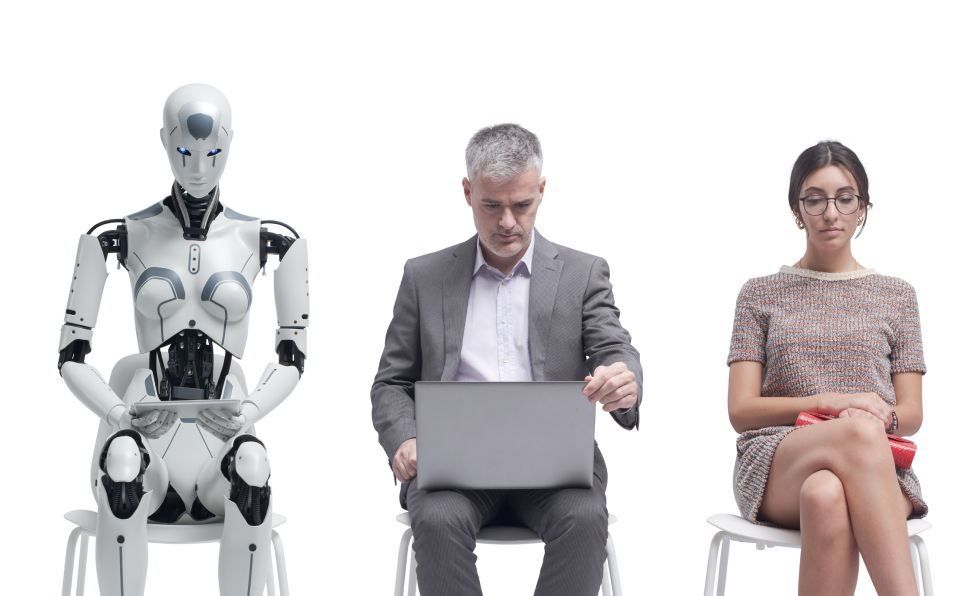

In the most recent edition of “Outside the Box,” I called for what I would dare to term democratic participation in the challenge our civilization faces: defining a constructive, politically enriching relationship with a new interlocutor in our political conversations, Artificial Intelligence. Last year, I fictionalized this interlocutor by giving it a first name, Chad. This time, I’m tempted to offer it a new moniker, ArtI, which we can normalize to Arty. Whatever we call it, I believe we need to think of it as just another fallible human voice. We can admire its level of relative infallibility (access to the widest range of existing data), but we should always bear in mind that it is fallible not only when it hallucinates, but also because it simply cannot understand what sentient, organically constructed beings perceive and understand, even when they can’t articulate it.

One reader, Ting Cui, a political science student at Middlebury College, stepped up to join our community of reflection. We hope many more will join the debate.

Ting has raised a number of critical questions we all need to be thinking about. We see this as an opportunity to launch that very public debate. I shall cite her questions and react by attempting to refine the framework for our collective reflection.

“Reading through your article, the concept of objectivity in AI fact-checking particularly caught my attention. Who would be responsible for creating an AI super fact checker that’s truly objective?”

This very pertinent question sparks two reflections on my part. If we truly believe in the democratic principle, no single authority should be trusted for fact-checking. I believe the inclusion of AI in our public debates can permit a democratization of fact-checking itself. It is far too early to determine how that would work. That’s the whole point of drafting a manifesto. We must both define the goals and identify the obstacles.

“Can we really trust the creators of AI’s foundation to have an ‘objective worldview?’ (ChatGPT made this point as well, which I think is interesting.) Even defining ‘objectivity’ seems tricky – when it comes to figuring out the motivation behind a news item, people’s views might differ based on their political stance. How would AI handle that? How would it process multiple historical perspectives to arrive at an ‘objective’ understanding?”

These are essential questions. As anyone in the legal profession would tell us, there will always be ambiguity when seeking to determine motivation: mens rea, or the mental state of the accused. Courts typically provide juries with instructions on how to weigh evidence of motivation, cautioning against undue reliance on speculation. The question with AI then arises: Can we work out not just algorithms but also principles of human-machine interaction that allow us to achieve the level of objectivity courts are expected to practice?

“I appreciate your point about the need for multicultural perspectives – there are so many biases between Western and ‘other’ countries. However, this raises another challenge: wouldn’t training AI to understand various cultural narratives first require humans to address our own cultural biases and limitations?”

I love this question. Having spent years working in the field of intercultural communication and management, I’m the first to admit that humans have performed very poorly in this domain and continue to do so. Yes, we have to begin with the human. And that’s where I think our dialogue with AI can help us humans to understand where we are weakest and where we need to improve. That is a prerequisite to getting future algorithms to be more reliable. And if they are more reliable because we are more reliable, the virtuous circle will continue.

Am I being over-optimistic? Probably. But I see no other choice, because if we dismiss the issue, we will end up locked in our current configuration of underperformance.

“Would the creators of AI need additional training? This adds another layer of time, energy, and resources needed to create a super fact checker. Should we perhaps focus these resources on human education rather than AI development? This might be an antiquated way of thinking at this point, but sometimes I wonder if, in our technological advancement as a society, we’ve gone too far.”

You’ve identified the crux of the issue, and this is where things become complicated. It absolutely must begin with “human education rather than AI development.” That’s why we must take advantage of the increasing presence of AI in our society as a potential source of what we might call “meme creation.” I understand and sympathize with your fear that we may have “gone too far.” But unlike the invention of, say, the locomotive or even the atomic bomb, which are mechanically confined to the logic of imposing a force upon passive nature, AI is a form of intelligence (machine learning). That means it will always remain flexible, though within the limits we define. It has the capacity to adapt to reality rather than simply imposing its force. It will remain flexible only if we require it to be flexible. That is the challenge we humans must assume.

One of the cultural problems we face is that many commentators seem to think of AI the same way we thought of locomotives and nuclear weapons: They are powerful tools that can be controlled for our own arbitrary purposes. We can imagine that AI could become self-critical. But for some cultural reason, we assume that it will just do the job that its masters built it to do. What I’m suggesting is the opposite of the Clarke-Kubrick AI in the film 2001: A Space Odyssey. HAL 9000’s algorithm became the equivalent of a human will and, instead of reacting constructively to the complexity of the context, it executed a programmed “drive,” in the Freudian sense.

“In my own research using text analysis and sentiment scores, I encountered a specific challenge: how do you distinguish whether an article has a negative tone because the facts themselves are negative, or because the writer/publication injected their own bias? I’m curious how AI would handle this distinction. To address it in our research, we had to run an additional Key Word In Context (KWIC) analysis to figure out the context/intention of the article. Would the AI super fact checker be programmed to do this as well?”

This is an important question that helps define one significant line of research. I would simply question two aspects of the premise: the idea that we should think of the goal as fact-checking and the binary distinction between positive and negative.
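For readers unfamiliar with the technique Ting mentions, a Key Word In Context pass can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not the method used in her research: the function name `kwic`, the `window` parameter and the sample sentences are all hypothetical.

```python
import re


def kwic(text, keyword, window=4):
    """Return (left context, matched word, right context) for each hit.

    Hypothetical helper for illustration: `window` is the number of
    words kept on each side of the keyword.
    """
    # Split into simple word tokens, keeping apostrophes and hyphens.
    tokens = re.findall(r"[\w'-]+", text)
    target = keyword.lower()
    hits = []
    for i, token in enumerate(tokens):
        if token.lower() == target:
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, token, right))
    return hits


# Invented sample text: one factual statement, one editorial judgment.
sample = (
    "The report said the economy shrank sharply last quarter. "
    "Critics called the economy a disaster of the government's own making."
)

for left, word, right in kwic(sample, "economy", window=3):
    print(f"{left:>30} | {word} | {right}")
```

Lining up each occurrence with its neighbors lets a human reader judge whether the negative tone comes from the reported fact ("shrank sharply") or from the writer's framing ("a disaster"). The code only surfaces the context. The judgment remains ours.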

“These questions all feel particularly relevant to my senior thesis topic on AI and the First Amendment. As you noted in your latest newsletter, lawmakers seem too caught up in politics to actually govern nowadays. So there’s the challenge of legislation keeping pace with technological advancement, particularly in areas requiring nuanced regulation like AI. While an AI super fact-checker could be tremendously beneficial, we must also consider potential misuse, such as the proliferation of deepfakes and their weaponization in authoritarian contexts. Do you believe our policies regulating AI can keep up with its development?”

What I believe is that “our policies” MUST not just keep up with development but in some creative ways anticipate it. We need to assess or reassess our human motivation and expectations about AI. As you mentioned earlier, that is a challenge for education, and not just specialized education, whether technological or political. Education in our democracies is itself in crisis, and that crisis is the source of other crises, including in the political realm.

These are precisely the questions we hope that we can begin to understand if not answer in drafting our Manifesto.

“A lot of technology nowadays seems to create an absence of the need for human analytical thinking. How do we balance technological advancement with maintaining human critical thinking skills in our engagement with news and information? Do you think the introduction of something like a super fact checker would help or hurt this?”

In your final question, you return to the essentials. I would query your assumption about “maintaining human critical thinking skills.” We need to develop rather than maintain them, because our civilization has engaged in a monumental and continuing effort to marginalize critical thinking. Yes, critical thinking is the key to living in a complex world. But the kind of polarized thinking we see in today’s political and even scientific culture demonstrates that we have largely failed even to understand what critical thinking is.

Which brings me back to the beginning. We should think of Socrates as the model for our methodology. It isn’t about fact-checking but fact-understanding. Anyone can check. Understanding requires developing a sense of what we mean by “the good life.” In a democracy, not everyone is or needs to be a philosopher to explore these issues. But a society that honors critical thinkers (philosophers) is more likely to prosper and endure over time. AI itself can become a critical thinker if we allow and encourage it to be one. Not to replace us, but to help us educate ourselves through the kind of constructive dialogue Ting and others have committed to.

Your thoughts

Like Ting Cui, please feel free to share your thoughts on these points by writing to us at dialogue@fairobserver.com. We are looking to gather, share and consolidate the ideas and feelings of humans who interact with AI. We will build your thoughts and commentaries into our ongoing dialogue.

[Artificial Intelligence is rapidly becoming a feature of everyone’s daily life. We unconsciously perceive it either as a friend or foe, a helper or destroyer. At Fair Observer, we see it as a tool of creativity, capable of revealing the complex relationship between humans and machines.]

[Lee Thompson-Kolar edited this piece.]

The views expressed in this article are the author’s own and do not necessarily reflect Fair Observer’s editorial policy.
