Generative AI (GenAI) productivity is driving the US stock market to record highs in spite of the economic headwinds from tariffs, layoffs and immigration problems. This reflects the tremendous productivity benefits that GenAI promises. But there are challenges to achieving those promises. The challenges lie in two areas: minimizing potential damage and addressing implementation difficulties.

A recent effort by a small group in the GenAI tech industry is a good start on minimizing damage. The group addresses one of the fundamental problems with AI. In doing so, it also points to a model that can be built upon and expanded to cover the full range of potentially damaging effects and implementation difficulties. It is important to develop a consensus in this area quickly, and creating a broader group on that model may be key to achieving that. This piece calls the proposed group the AI Working Group (AIWG).

The AI 2027 model

AI 2027 is a small group composed of people who have experience working inside the companies that produce frontier GenAI models and who share a particular concern. Some of them were able to leave their jobs and get philanthropic donations to act as the core of the group. Others volunteered anonymously.

The concern they share is called “alignment”. Alignment is the problem of ensuring that an AI system performs its intended tasks correctly and does not perform unintended tasks. This is achieved by ensuring that the AI is “aligned” with a clear specification of what it is supposed to do and what it is not supposed to do. This specification is called the spec.

The group is concerned that AIs will get out of alignment. An out-of-alignment AI system could decide that humanity is getting in the way of its own goals and therefore get rid of humanity. The group’s scenario is based on the current AI development race, which is driven by geopolitical and business forces. The race is causing developers to turn too much control over to AI systems before alignment is assured.

The group developed a convincing scenario, titled AI 2027 and founded on technical principles, that showed how AI development could reach the key turning point by 2027, after which the end of humanity would be inevitable. In later YouTube video clips, they said the turning point is more likely to come in 2028.

The group also presented a scenario in which AIs produce extreme benefits for humanity and the alignment problem is avoided. The group has specific recommendations on what needs to be done to achieve a positive outcome. The recommendations involve some highly technical AI factors, but essentially involve slowing down and making sure that humans can observe everything an AI is doing in the AI development process.

The core group members are identified as coauthors on the website and have given several video interviews. To develop and test their scenarios, the core group relied on input, review and feedback from a larger group of people active in the AI industry. This larger group stayed anonymous. This anonymity is important because recognized participation could have put jobs and careers in jeopardy.

The AI 2027 report garnered significant attention in the AI industry. There are signs that company leaders developing advanced AI systems are taking it seriously. The AI race continues. However, some indications suggest that certain recommendations from the AI 2027 report are being quietly implemented.

Thus, the organizational model that AI 2027 developed proved to be effective in its somewhat narrow focus. As a model, it provides a good foundation for the structure of an organization to address broader challenges, including potentially damaging effects and difficulties in achieving the full potential of AI applications.

Damaging effects

The alignment problem is real. But there may be a more serious underlying issue as well: Who writes the spec, and how does society change to accommodate the dramatic benefits of AI?

What the spec contains and how it is produced are critical. Making sure that AIs are properly and completely aligned is essential. A possibly bigger question is what expertise is brought to bear when writing the specs.

It appears that today each company that creates frontier models writes its own spec. Each tries to determine what spec is best from its own narrow product/market/revenue perspective. This makes sense from a company’s point of view. However, from a broader societal perspective, it leaves much to be desired.

Many employees of these frontier model companies have a sincere desire to make AI produce the best societal outcomes. However, these same people may be limited in their ability to affect the spec and may lack some of the non-computer expertise required.

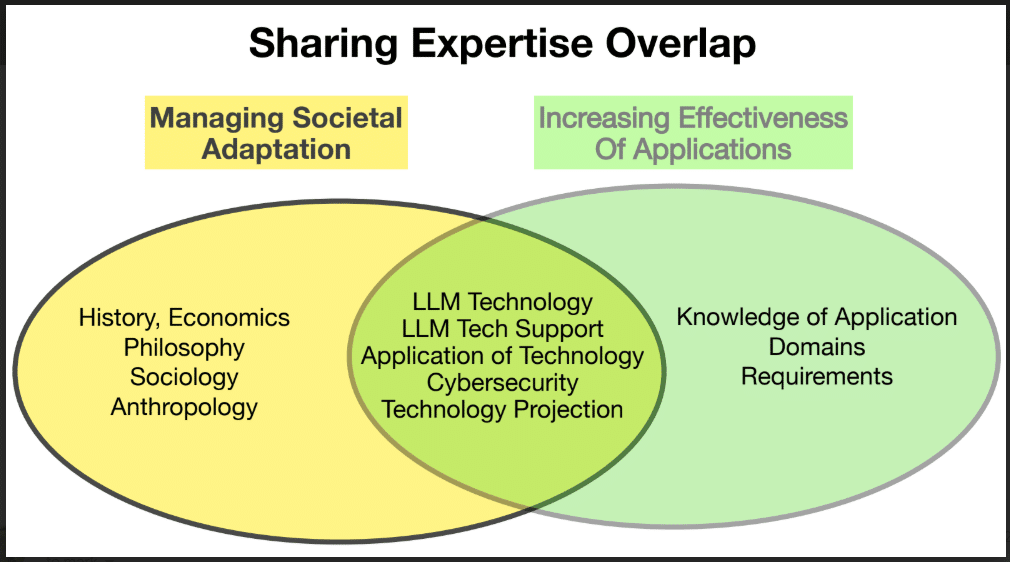

Although AI technical expertise is necessary to develop a well-formed spec, it is not sufficient. Expertise in history, economics, philosophy, sociology, anthropology, etc., is also important in creating a spec that truly produces the best possible societal outcomes. A friend of mine characterizes some of the people vocal on this subject as attempting to build a utopia without understanding what a utopia is. That is another way of saying that they lack the historical, economic, philosophical, sociological and anthropological backgrounds to understand what has been tried before and failed.

Some may argue that since most frontier models are trained on a corpus of data that reflects most of humanity’s experience, we can depend on AI to fill in the expertise that is missing. Unfortunately, without the spec, it can be dangerous to rely on an AI for this. It could become something akin to the blind leading the blind…

Not only must the spec be well-formed, but it must be perceived as having been done reasonably. Society at large has to be assured that the spec was prepared properly, in a way that makes people feel comfortable in accepting the result.

Similar problems exist around how the constituents of each training corpus are determined.

Finally, there must be cybersecurity protections sufficient to ensure that the spec cannot be overcome, circumvented or compromised. There has been a lot of work on prompt injection attacks. More recently, AIs have also proved susceptible to social engineering attacks. More security vulnerabilities are likely to appear. This is a technical problem that should be within the capability of the frontier model-building companies. However, the AI race may create so much pressure that too little time, resources and expertise are applied to the problem. There needs to be some kind of external way to ensure that there is adequate security.

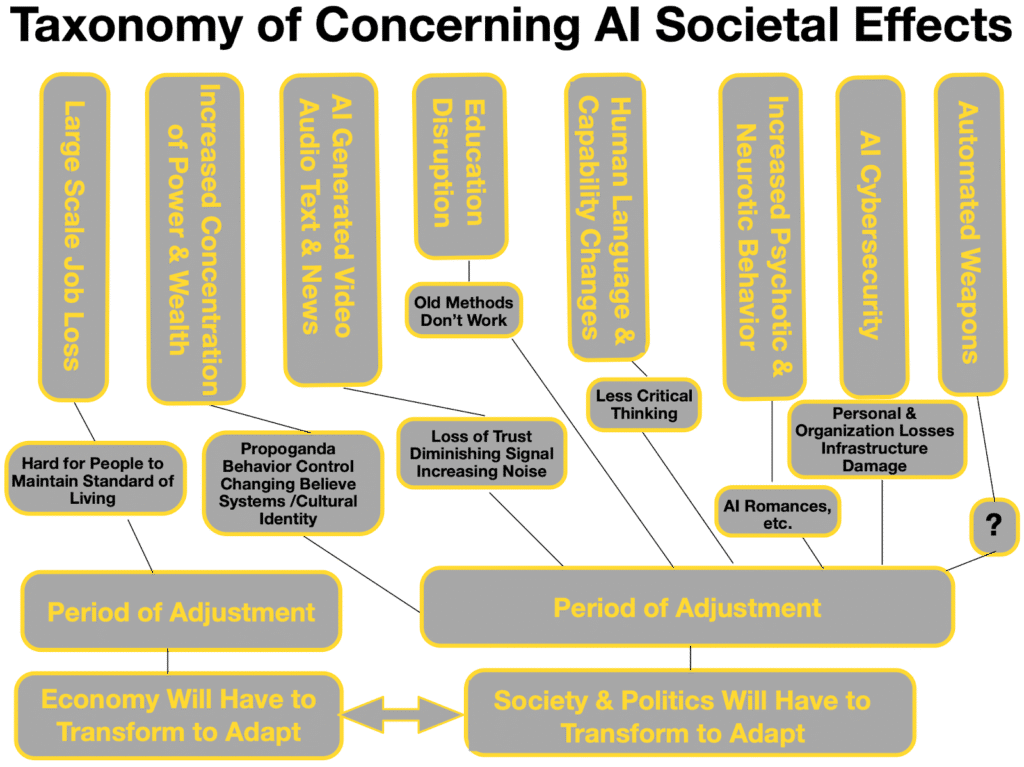

Even with a proper spec, AIs are creating and will continue to create social impact problems. An illustration of these potential problems is shown in the taxonomy below. The taxonomy simply lists the societal effects that can be observed from today’s vantage point. There may well be others that become apparent as we move forward.

Each of these effects will have a fundamentally disruptive societal impact. Each will require a transition period from current societal structures to new ones. The challenge is to make this transition in a way that causes minimal damage. To minimize the damage and maximize the benefits from AI, these transitions need to be anticipated and mitigation strategies developed for each.

Mitigating these societal effects requires the expertise of professionals in frontier model-building companies to understand and prepare for AI capabilities in a timely manner. But that will not be enough. Here again, expertise in history, economics, philosophy, sociology, anthropology and other relevant fields will also be necessary.

The challenge is how best to address concerning societal AI impacts, in the broadest sense, without killing the goose that lays the golden eggs.

Our current society has, in large part, developed around and accommodated the Industrial Revolution. Since the advent of the PC/Internet/Web, people have been discussing the Information Age and its impact on the world. GenAI is going to have at least an order of magnitude greater impact. We can either be ahead of the curve or behind it. That is, we can either prepare for the changes that are coming or struggle to adapt after they hit hard. To the extent we are ahead of the curve, we will minimize the pain that people will feel going through the changes.

Unfortunately, the changes are the result of a complex matrix of factors interacting in previously unseen ways. It can be thought of as trying to put a puzzle together without the picture on the box top.

The good news is that many organizations are working on pieces of the puzzle. The bad news is that most of these organizations lack some of the expertise needed. That is akin to missing some of the pieces of the puzzle. These organizations include companies developing the tech, those applying it, those investing in it, governments, academic organizations and nonprofits. Some are motivated by self-interest and not societal well-being. Some are well-intentioned. Some are not.

Each of these organizations, and some of the people in them, has a portion of the expertise needed. But not all of the expertise. So, for example, some in the AI tech community talk about a guaranteed minimum income as a way to mitigate the effects of AI job losses. But the same people don’t have the expertise necessary to create realistic proposals about how to realize that vision in all the different economic, political and social situations around the world.

Furthermore, each of these organizations is subject to external pressures that can significantly influence what they can do and say. Some of the people who could make significant contributions are afraid that if they do so, their jobs could be in jeopardy. The situation is further complicated by political and economic ideologies that were developed in response to the Industrial Revolution and are no longer relevant today.

As a result, none of these organizations, or people within them, are optimally positioned to figure out how to stay ahead of the curve — to minimize pain and damage as we go through the transitions.

Capturing the full promise of AI

GenAI intelligent agents have the potential for significant productivity improvements. To achieve the full productivity benefits, we need to learn how to develop, deploy and secure these intelligent agents. Currently, it appears that, as an industry, we are very low on the learning curve. For example, a recent report from the Massachusetts Institute of Technology (MIT) states that 95% of GenAI pilots at companies are failing.

The best way to quickly move up the learning curve is to create a way for agent implementers to share experience, develop best practices based on that experience and help one another move up the learning curve. To achieve the full promise of AI, accelerating progress up the learning curve is important.

In past stages of technological evolution, vendors have created vendor-specific user groups, such as the San Francisco Apple Core, which led to the International Apple Core, and the International Business Machines Corporation’s (IBM) SHARE. With AI intelligent agents, however, it is not unusual for an application developer to use more than one large language model (LLM) and to choose them from different vendors, so a group tied to a single vendor would cover only part of the picture. These LLM choices are based on considerations of best fit for functionality, local resources available, latency, etc. LLMs are also evolving at a rapid rate, which can further complicate the choice of an LLM suite.

The best way to move up the learning curve more quickly is to provide a way for people to come together and share their experiences, both successes and failures. In previous generations of technology, this was done in groups that operated on a principle of coopetition. That is, cooperation on fundamentals creates a foundation on which each participant can compete on applications.

Such a cooperative organization could develop tutorials and best practices in a wide-ranging set of areas. Examples might include:

— How to select functions for AI agents.

— Selecting LLM(s).

— Determining privacy and security requirements.

— Meeting up-time/reliability requirements.

— Handling hallucinations.

— Developing security architectures.

— Handling end-user acceptance problems.

— Handling deployment, maintenance and related issues.

— Managing life cycles.

— Creating input to vendor requirements.

The overlapping expertise required to address both the potentially damaging effects and the challenges in applying AI can be seen in the illustration below. The expertise requirements for both have a very large area of overlap. So, it makes sense to have a single organizational umbrella that addresses both. Within that umbrella, there can be different working groups. How the detailed structure of the working groups is set up should be decided by the group’s membership. The structure and process for creating it should anticipate changes in working groups as technology and its applications evolve.

AIWG is a response to the challenges

AIWG is envisaged as an organization structured to possess the requisite expertise and to have methods for resisting outside pressures. The primary function of the organization is to provide other groups in society with information and recommendations on how to maximize the benefits of AI while making necessary changes to our social and economic structure to accommodate AI.

In thinking about the structure of AIWG, much can be learned from the structure of AI 2027. There needs to be a core group of people. With sufficient funding, some of these people may be full-time employees. Others may be part-time volunteers working on AIWG after normal working hours. Some of these participants may be anonymous due to fear of employment repercussions. Finally, there must be a formal process by which those working in AIWG reach an agreement on what is said and done in the name of the organization. This can be done by consensus, by voting or by a combination of the two.

AIWG needs to have key members well-grounded and constantly up-to-date on AI technology. They may come from the leading-edge LLM developers, AI application specialists and AI technical people working on applications of the technology in the organizations that employ them. AIWG must also have people with expertise in the social sciences, government, etc. It is essential to have good representation from each area of expertise in the core group.

AIWG should focus on:

— Alignment problem.

— Spec problem.

— Societal accommodation problem highlighted in the taxonomy.

— Application learning curve problem.

Each of these may be handled by a different working group. However, care must be taken to ensure that there is effective cross-communication between the working groups. Cross-communication should take full advantage of the synergy between them.

AIWG’s primary outputs will be information and recommendations:

— Explanations of the technology: how it is likely to evolve and what problems society is likely to encounter as a result.

— Recommendations: ways of modifying society to adjust to AI while assuring a good quality of life for all, and improvements in both the development and applications of the technology.

AIWG should not focus on stopping or limiting the development of the technology. It should not come to be perceived as a group of naysayers and Luddites. The objective is to maximize the benefits from AI while minimizing trouble for people caught in the inevitable transitions and disruptions.

The first step in creating a group like AIWG is talking about it. This piece is intended to be a step in that direction: to catalyze others to start and build conversations about AIWG or something like it.

[If you are interested in learning more about this, please go here, where you will find updated information and ways to join the conversation.]

[Kaitlyn Diana edited this piece.]

The views expressed in this article are the author’s own and do not necessarily reflect Fair Observer’s editorial policy.
